A View of a Leaf Variable That Requires Grad Is Being Used in an In-place Operation / RuntimeError: A View of a Leaf Variable That Requires Grad Is Being Used in an In-place Operation (Issue #2408, ultralytics/yolov5, GitHub)

The message "a view of a leaf Variable that requires grad is being used in an in-place operation" is raised during the training phase of the model. The same error is reported when running on Docker in issue #1552 (closed), opened by NanoCode012 on Nov 29, 2020.

Weights 0 In Place Operations Part 1 2020 Deep Learning Course Forums

Why is it wrong to change a leaf variable with.

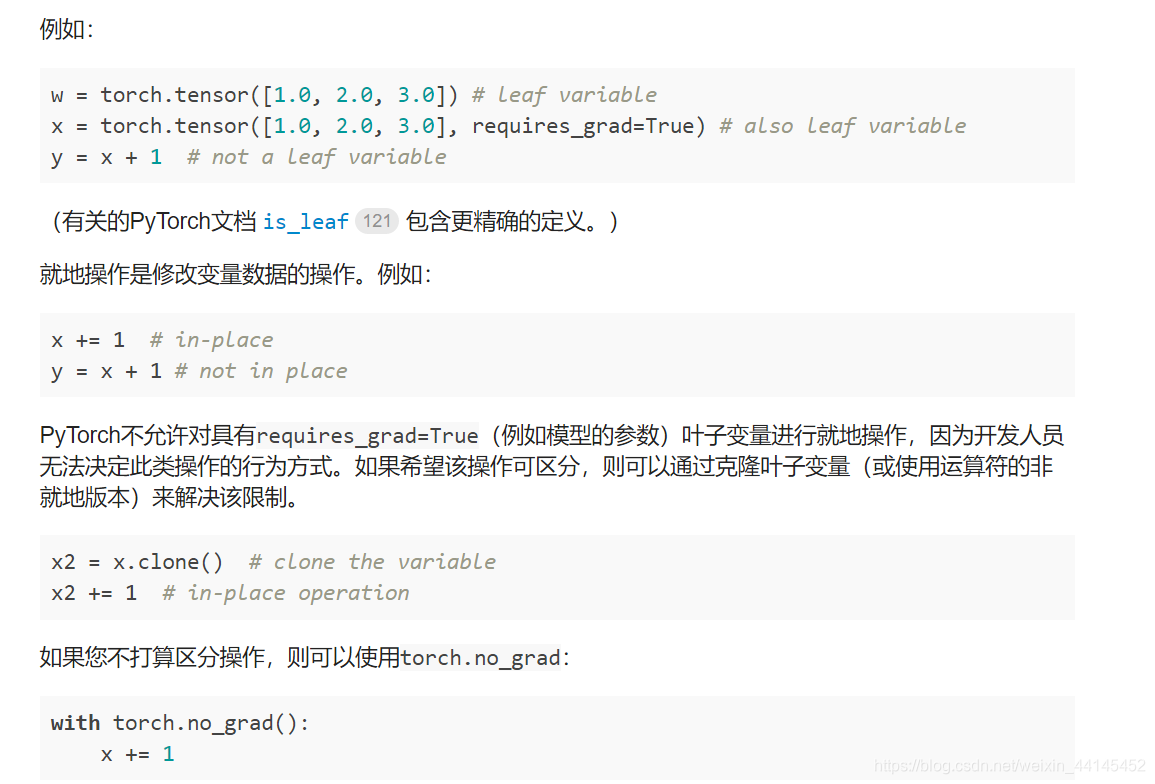

"A view of a leaf Variable that requires grad is being used in an in-place operation." In PyTorch there are two situations in which an in-place operation cannot be used. A closely related message is "a leaf Variable that requires grad has been used in an in-place operation." Enable no-grad mode when you need to perform operations that should not be recorded by autograd, but you'd still like to use the outputs of these computations in…

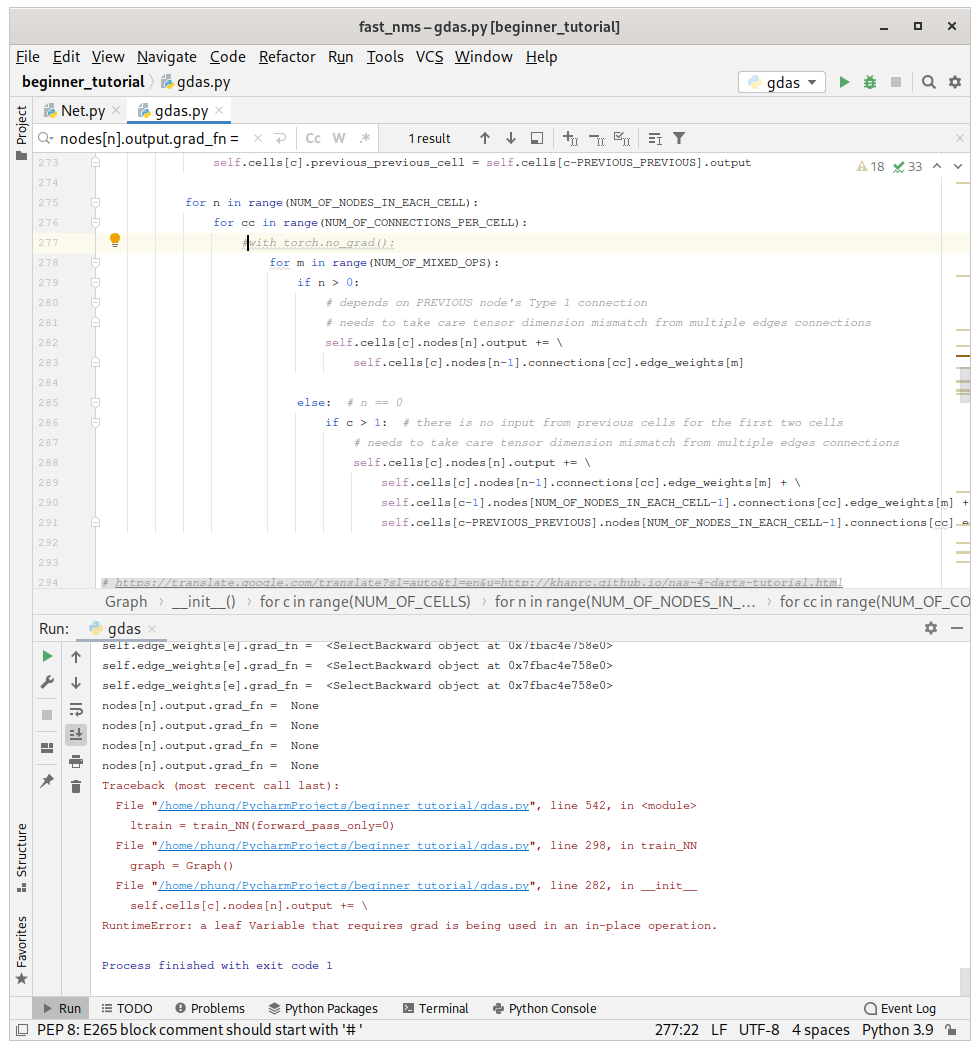

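As I understand it, this issue originates in the `_initialize_biases` function in `models/yolo.py`, line 150: "a view of a leaf Variable that requires grad is being used in an in-place operation." PyTorch code that had been working fine suddenly stops at some point with "a leaf Variable that requires grad has been used in an in-place operation." The error means that a leaf variable that requires gradients was used in an in-place operation. An in-place operation overwrites the original value; because it is an assignment rather than a computation, the gradient has no defined value (it could be thought of as a jump with infinite gradient), so…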

Once `x += 2` has been changed to `y = x + 2`, writing `y += 2` is fine. In other words, a torch variable with `requires_grad` set cannot be the target of a `+=` operation.

Thank you for any help. To resolve "a view of a leaf Variable that requires grad is being used in an in-place operation", the code is modified as follows, starting at `def _initialize_biases(self, cf=None):` …
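The snippet above names `_initialize_biases` but does not reproduce its body, so what follows is only a minimal sketch of the same pattern, not the YOLOv5 code. It assumes a hypothetical `nn.Conv2d` layer whose bias is reshaped with `view` and then adjusted; the layer sizes, the helper names, and the offset of 1.0 are made up for illustration.

```python
import torch
import torch.nn as nn

# Hypothetical layer, chosen only for illustration (not taken from YOLOv5).
conv = nn.Conv2d(in_channels=8, out_channels=6, kernel_size=1)


def bump_bias_in_grad_mode(layer):
    """Write through a view of the bias while autograd is recording.

    Returns the error text if PyTorch rejects the write, else None.
    """
    b = layer.bias.view(3, -1)  # a view of a leaf that requires grad
    try:
        b[:, 1] += 1.0  # in-place write through the view
    except RuntimeError as err:
        return str(err)
    return None


def bump_bias_no_grad(layer):
    """Same update, but the view and the write both happen under no_grad."""
    with torch.no_grad():
        b = layer.bias.view(3, -1)
        b[:, 1] += 1.0


before = conv.bias.detach().clone()
error_text = bump_bias_in_grad_mode(conv)  # rejected by autograd
bump_bias_no_grad(conv)                    # accepted
after = conv.bias.detach().clone()
```

In this sketch the bias remains a leaf parameter that requires grad after the update; only the initialisation step is hidden from autograd.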

`w = torch.FloatTensor(10)`: `w` is a leaf tensor. `w.requires_grad = True` sets `requires_grad` to True on `w`. For the `+=` case, change the statement to `y = x + 2`.

Both "a leaf Variable that requires grad has been used in an in-place operation" and "a view of a leaf Variable that requires grad is being used in an in-place operation" concern a leaf tensor with `requires_grad=True`.

"A leaf Variable that requires grad is being used in an in-place operation." The error is triggered by `x = Variable(torch.ones(2, 2), requires_grad=True)` followed by `x += 2`.

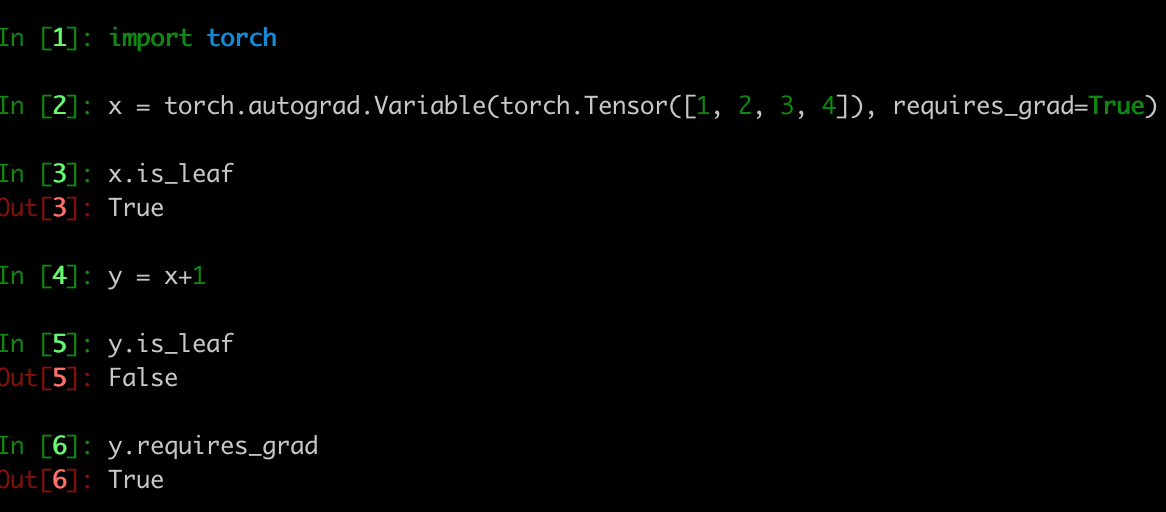

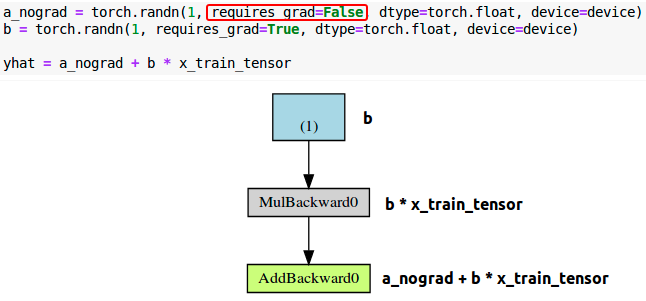

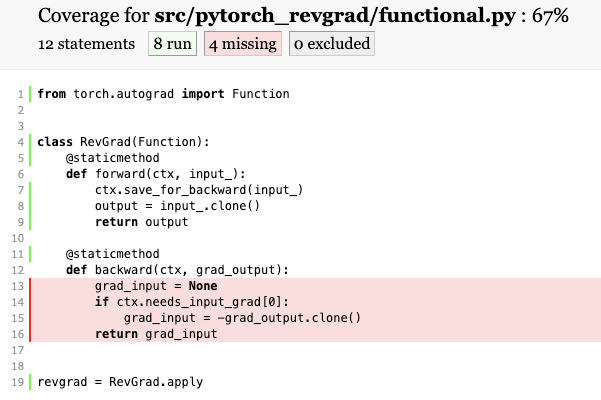

In order to enable automatic differentiation, PyTorch keeps track of all operations involving tensors for which the gradient may need to be computed (i.e., `requires_grad` is True). Conversely, computations in no-grad mode are never recorded in the backward graph, even if there are inputs that have `requires_grad=True`. The culprit is PyTorch's ability to build a dynamic computation graph from every Python operation that involves any gradient-computing tensor or its dependencies.
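As a small illustration of the recording behaviour described above, the following sketch compares the same multiplication inside and outside `torch.no_grad()`. The variable names are arbitrary.

```python
import torch

x = torch.ones(2, 2, requires_grad=True)  # leaf tensor tracked by autograd

y = x * 2  # recorded: y becomes part of the backward graph

with torch.no_grad():
    z = x * 2  # not recorded, even though x requires grad

# (requires_grad, has a grad_fn) for each result
tracked = (y.requires_grad, y.grad_fn is not None)
untracked = (z.requires_grad, z.grad_fn is not None)
print(tracked, untracked)
```

The result computed under `no_grad` carries no `grad_fn`, so nothing done to it can affect a later backward pass through `x`.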

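For a leaf tensor with `requires_grad=True`, in-place operations cannot be used. The example imports `torch`, creates `w = torch.FloatTensor(10)`, sets `w.requires_grad = True`, and then calls `w.normal_()`. Executing that last statement raises an error: "RuntimeError: a leaf Variable that requires grad has been used in an in-place operation." When a view of the leaf is modified instead, the message reads "a view of a leaf Variable that requires grad is being used in an in-place operation."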
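The `normal_()` case can be reproduced in a few lines. The sketch below uses `torch.empty(10)` in place of the older `torch.FloatTensor(10)` spelling, and then shows two commonly used ways to initialise the tensor without triggering the check. Both workarounds are offered as illustrations, not as the fix adopted in any of the quoted threads.

```python
import torch

w = torch.empty(10)     # leaf tensor (stands in for torch.FloatTensor(10))
w.requires_grad = True  # from here on, autograd tracks w

try:
    w.normal_()         # in-place op on a leaf that requires grad
    message = None
except RuntimeError as err:
    message = str(err)

# Workaround 1: initialise first, then switch on gradient tracking.
w1 = torch.empty(10).normal_().requires_grad_()

# Workaround 2: run the in-place initialisation with recording switched off.
w2 = torch.empty(10, requires_grad=True)
with torch.no_grad():
    w2.normal_()
```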

Computations in no-grad mode behave as if none of the inputs require grad. Second attempt: "RuntimeError: a view of a leaf Variable that requires grad is being used in an in-place operation."

Gadget Ben, March 18, 2021, 8:57pm (#2): With `x = torch.ones(2, 2, requires_grad=True)` followed by `x.add_(1)`, I will get the error. What I don't understand is the philosophy behind this rule.

The operations are recorded as a directed graph. The `detach()` method constructs a new view on a tensor which is declared not to need gradients, i.e., it is to be excluded from further tracking of operations, and therefore the… The error "a leaf Variable that requires grad has been used in an in-place operation" appears because the code was written as `x += 2`; change it to `y = x + 2`. Writing `y += 2` afterwards is fine, which is to say that a torch variable with `requires_grad` set cannot be the target of `+=`. The snippet begins with `import numpy as np`, `import torch`, `from`…
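Both ideas in the paragraph above, replacing `x += 2` with an out-of-place `y = x + 2` and using `detach()`, can be sketched as follows. The tensor shapes and values are arbitrary.

```python
import torch

x = torch.ones(2, 2, requires_grad=True)

# Out-of-place: y is a new non-leaf tensor, so x itself is never overwritten.
y = x + 2
y += 2                      # in-place on the non-leaf result is accepted here
y.sum().backward()
grad_of_x = x.grad.clone()  # d(sum(x + 4))/dx is 1 for every element

# detach(): shares storage with x but is excluded from tracking.
d = x.detach()
d.add_(1)                   # allowed; the change is visible through x as well
```

Because the detached tensor shares memory with `x`, writing to it changes the values `x` holds. That is convenient for initialisation, but it should be used with care once a graph that depends on `x` has been built.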

"A view of a leaf Variable that requires grad is being used in an in-place operation." I understand that PyTorch does not allow in-place operations on leaf variables, and I also know that there are ways to get around this restriction.

When running the default Google Colab notebook, a RuntimeError is raised. The snippet uses `from torch.autograd import Variable`.

It turns out to be a case of too much of a good thing. A view of a leaf Variable that requires grad is being used in an in-place operation.

Runtimeerror A View Of A Leaf Variable That Requires Grad Is Being Used In An In Place Operation Issue 2408 Ultralytics Yolov5 Github

Understanding Pytorch With An Example A Step By Step Tutorial By Daniel Godoy Towards Data Science

Pytorch Autograd Explained Kaggle

Understanding The Error A Leaf Variable That Requires Grad Is Being Used In An In Place Operation By Mrityu Sep 2021 Medium

Pytorch Leaf Variable Handling Update Issue 18 Source Separation Tutorial Github

Leaf Variable Was Used In An Inplace Operation 21 By Technetium 43 Pytorch Forums

Runtimeerror A View Of A Leaf Variable That Requires Grad Is Being Used In An In Place Operation Issue 34 Quva Lab E2cnn Github

Training Loss Stays The Same Pytorch Forums

Runtimeerror A View Of A Leaf Variable That Requires Grad Is Being Used In An In Place Operation When Running On Docker Issue 1552 Ultralytics Yolov5 Github

Runtimeerror Output 0 Of A Function Created In No Grad Mode Is A View And Is Being Modified Inplace 落雪wink的博客 Csdn博客

Custom Autograd Function Backward Pass Not Called Autograd Pytorch Forums
